Adam原理及实现

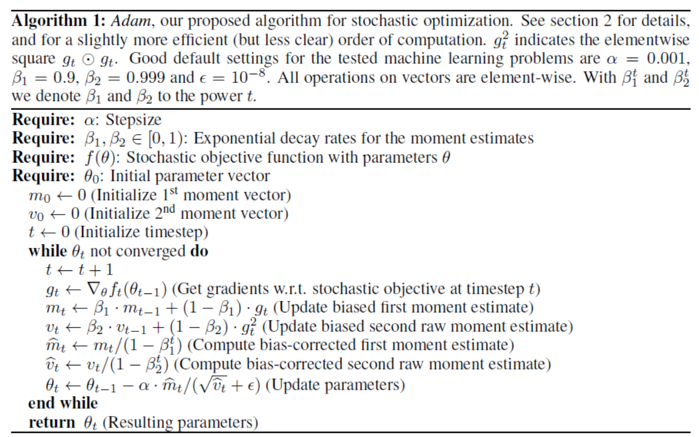

Adam是一种梯度下降的算法,综合考虑了梯度的一阶矩、二阶矩,计算更新步长。 直接上伪代码:

更新规则

计算出梯度 $ g_{t} $

首先,计算梯度的指数移动平均数 $ m_{t} $

$ m_{t} = \beta_{1}m_{t-1} + (1 - \beta_{1}g_{t})$ 其中$m_{0}$初始化为0, $\beta_{1} $为指数衰减率,控制权重分配,通常取0.9

其次,计算梯度平方的指数移动平均数 $v_{t}$

$v_{t} = \beta_{2}v_{t-1} + (1 - \beta_{2}g_{t}^{2})$ 其中$v_{0}$初始化为0, $\beta_{2}$为指数衰减率,控制梯度平方的权重分配,通常取0.999

第三,由于$m_{0}$初始化为0,导致训练初期阶段$m_{t}$偏向0,需要进行偏差纠正, 降低偏差对训练初期的影响

$\hat{m_{t}} = m_{t}/(1 - \beta_{1}^{t})$

第四,与$m_{0}$类似,$v_{0}$也会出现偏差,需要进行纠正

$\hat{v_{t}} = v_{t}/(1 - \beta_{2}^{t})$

最后更新参数,初始学习率乘梯度均值,然后除以梯度方差的平方根

$\theta_{t} = \theta_{t-1} - \alpha * \hat{m_{t}} / (\sqrt{\hat{v_{t}} + \epsilon})$

代码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

class Adam:

def __init__(self, loss, weights, lr=0.001, beta1=0.9, beta2=0.999, epislon=1e-8):

self.loss = loss

self.theta = weights

self.lr = lr

self.beta1 = beta1

self.beta2 = beta2

self.epislon = epislon

self.get_gradient = grad(loss)

self.m = 0

self.v = 0

self.t = 0

def minimize_raw(self):

self.t += 1

g = self.get_gradient(self.theta)

self.m = self.beta1 * self.m + (1 - self.beta1) * g

self.v = self.beta2 * self.v + (1 - self.beta2) * (g * g)

self.m_hat = self.m / (1 - self.beta1 ** self.t)

self.v_hat = self.v / (1 - self.beta2 ** self.t)

self.theta -= self.lr * self.m_hat / (self.v_hat ** 0.5 + self.epislon)

def minimize(self):

self.t += 1

g = self.get_gradient(self.theta)

lr = self.lr * (1 - self.beta2 ** self.t) ** 0.5 / (1 - self.beta1 ** self.t)

self.m = self.beta1 * self.m + (1 - self.beta1) * g

self.v = self.beta2 * self.v + (1 - self.beta2) * (g * g)

self.theta -= lr * self.m / (self.v ** 0.5 + self.epislon)

参考自:https://zhuanlan.zhihu.com/p/32698042

This post is licensed under CC BY 4.0 by the author.